
#52 JooBee's newsletter

TL;DR

👓 Read this before measuring AI adoption in performance reviews

🎭 Brilliant Jerk or Friendly Incompetent? Which are you rewarding?

🎧 Listen to the newsletter here

This newsletter edition is brought to you by Zelt 💛

HR leader, are you seen as strategic or stuck in admin? I’ve created the Strategic HR Readiness Quiz to help you find out where you stand and get a personalised report with clear actions to step up your influence and impact.

Question: I want AI adoption to be included in performance reviews. We need to adapt to change; this is important to the business.

Planning to measure AI adoption in Performance Reviews? Read this first

Founders keep telling me the same thing:

“I want AI adoption included in performance reviews. We need to adapt to change.”

HR leaders are also saying the same:

“My founder wants this. We want to keep up with the times. People need to use AI more.”

Okay, fine. But by now, you already know what I’m going to ask: Why is this important?

And that’s when things start to wobble. Most responses are vague. Trend-driven. FOMO-fuelled.

“We need to keep up.”

“Everyone should be using it.”

“It’s the future.”

Yes, yes…but what do you actually want AI adoption to achieve?

[**Cue tumbleweeds🌵🌬️**]

And here we are, back at the same old problem with performance reviews. We measure what people do…not whether it drives results.

Measure what matters: Impact, not busywork

When I press harder, the answers finally get clearer:

“CS can expand accounts by flagging churn and upsells.”

“Sales can grow pipeline with better targeting and outreach.”

“Tech can improve quality through smarter incident management.”

“We can hit goals without extra headcount or cost.”

Now that’s impact and performance. AI isn’t the point — business outcomes are.

Know your goals: Effort vs. outcome

So let’s talk about goals. There are broadly two kinds:

💪 Effort goals (process): hours worked, tools adopted, skills developed, tasks/projects/activities completed, etc.

📈 Outcome goals (results): revenue delivered, speed of execution, customers retained, risk mitigated, results shipped, etc.

Here’s the critical difference:

Effort = lead indicator → what went in

Outcome = lag indicator → what came out

If you listen closely, you’ll hear business leaders talk a lot about lead and lag indicators.

Let’s illustrate this with an example.

Netflix

Let’s say Netflix wants to increase customer renewals. Renewal is a lag indicator — we only know the outcome after it happens.

But they track lead indicators like viewing hours, watch frequency and interaction levels. Because if those drop, they have time to act before renewal tanks. That’s the value of lead data.

However, if we’re really honest with ourselves, business success (even survival) ultimately hinges on renewals, not hours streamed.

So why am I dragging you through all these distinctions? 😅

Because we’re making the same mistake (again!) with AI in performance reviews. We over-index on effort:

“Are people using AI?”

“Did they attend the AI lunch & learn?”

“How many tools have they tried?”

Then comes performance review time and everyone’s misaligned:

Founders expect results.

Managers argue and point to effort and activities.

HR gets stuck in the middle, again.

It’s the performance equivalent of installing a treadmill in the office… and wondering why no one has run a marathon. 🏃

Don’t repeat the mistake

If you want to include AI in reviews, ask the right question:

“Are we measuring effort → adoption? Or outcome → results?”

Both have their value. Just don’t confuse one for the other.

If you’re going to reward results, AI adoption is not the result. Impact is.

Brilliant jerk? Friendly incompetent? Who are you rewarding?

In the article above, I talked about the trap of measuring effort vs. outcome without clear separation. On paper, simple. In practice? Messy.

If your company uses a single performance rating, here’s what happens:

One manager scores based on outcomes.

Another scores based on effort.

And……employees talk.

They compare. They speculate. One thinks they’re being evaluated on delivery. The other thinks it’s all about attitude. Inconsistency breeds confusion and frustration. And frustration turns into, “Why did they get a raise?”

And that leads to the worst thing in any performance system: perceived unfairness.

Effort vs. outcome: Is one better than the other?

Nope. And that’s exactly the point.

As I always say, strategy is a choice — not a default, not borrowed ‘best practices.’

The key is to be intentional. Decide what matters to your business, then back it with structure and consistency.

I’ll share how I tackled the challenge. Not as a prescription, but to show the rationale that shaped our choices.

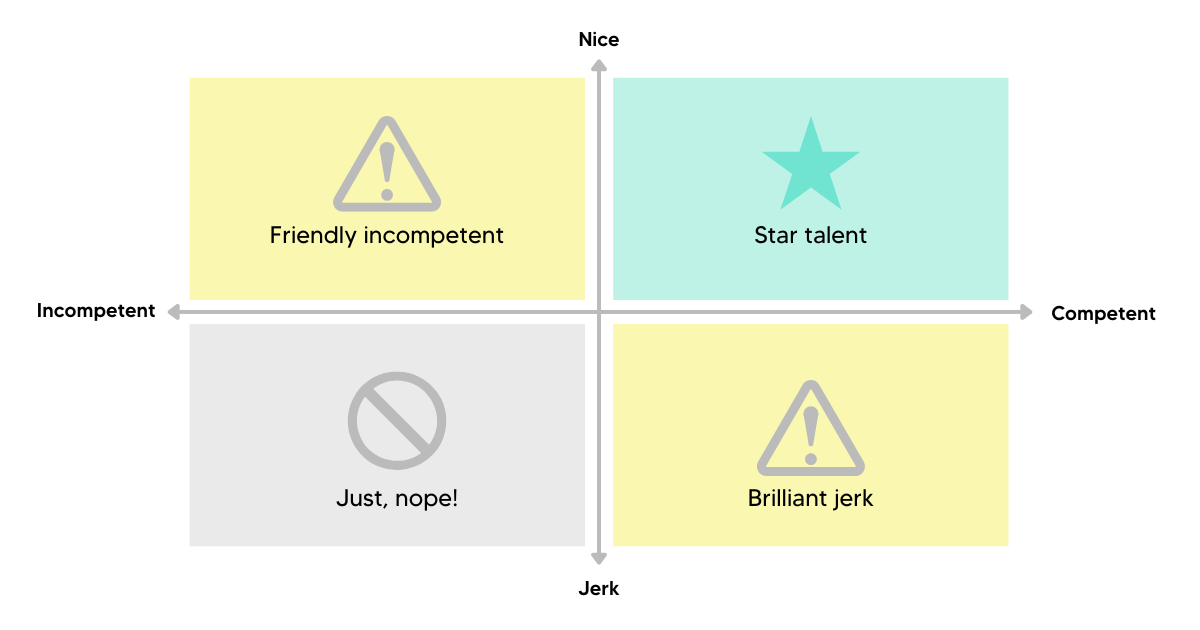

The Brilliant Jerk vs. Friendly Incompetent

We’ve all seen it:

The brilliant jerk who delivers results but poisons the team.

The friendly incompetent whom everyone likes but who doesn’t deliver.

Neither is good for the long run. One undermines culture. The other drags down performance.

But this is what happens when no one is aligned on what “good performance” actually looks like. And if you’ve only got one rating, managers default to their personal biases:

Results-first managers defend the jerk.

Relationship-first managers protect the nice-but-ineffective one.

Over time, both types erode team standards.

And when that happens, I’ve seen it play out the same way: The real talent (the ones who are both good and good to work with) leave…and the company is left with both ends of the spectrum.

“Put your money where your mouth is”

That’s exactly what I told our exec team when I pitched the change.

If we truly believe performance is about what you deliver and how you deliver it, then we need to evaluate—and reward—both. Explicitly.

So that’s what we did.

Two performance ratings. Not one

We separated performance into two distinct ratings:

Outcome → how well they deliver against role expectations.

Effort → how well they demonstrate values and behaviours (the “how”).

We used a 7-point scale (I’ve tested many scales and my preference is odd numbers. But that’s another newsletter; email me if you want the rationale). A score of 4 means meeting 100% of expectations; scores on either side reflect performance above or below expectations.

We reward what is important to us

We made a deliberate call: we reward performance.

But in our business, performance = outcome + effort.

That means salary reviews are only triggered when both are met. No one coasts on charm. No one gets rewarded for results at the cost of culture.

Example:

Outcome (role expectations) = 6/7

Evidence: delivered the quarterly revenue target with a 10% uplift.

Effort (values/behaviours) = 3/7

Evidence: consistent complaints from peers about collaboration; ignored agreed team processes.

High results, low values = no salary review

Of course, as a business, we expect managers to act. Reset expectations. Build a clear development plan. Support the individual to course-correct.

It’s not about what others value. It’s about what you value.

Performance reviews only work if they reflect what your business truly cares about.

I’ve been asked, “What if we value results more…like 75% outcomes, 25% behaviours?”

Great. Then make that clear. Align your process to it.
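To see how much that choice changes outcomes, here is a minimal sketch in Python that applies both rules to the example above (Outcome 6/7, Effort 3/7). It assumes “met” means a score of 4 or more on the 7-point scale, and it assumes the weighted version uses the same threshold. The function names and the threshold constant are hypothetical, not part of any specific HR tool.

```python
# Hypothetical sketch: function names and thresholds are illustrative assumptions.
MEETS_EXPECTATIONS = 4  # assumption: on the 1-7 scale, 4 = meeting 100% of expectations


def check_scale(score: int) -> None:
    """Reject scores outside the 1-7 scale."""
    if not 1 <= score <= 7:
        raise ValueError(f"score {score} is outside the 1-7 scale")


def salary_review_gate(outcome: int, effort: int) -> bool:
    """Two-rating rule: trigger a salary review only if BOTH ratings meet expectations."""
    check_scale(outcome)
    check_scale(effort)
    return outcome >= MEETS_EXPECTATIONS and effort >= MEETS_EXPECTATIONS


def salary_review_weighted(outcome: int, effort: int, outcome_weight: float = 0.75) -> bool:
    """Alternative: blend both ratings into one score (default 75% outcome, 25% behaviours)."""
    check_scale(outcome)
    check_scale(effort)
    blended = outcome_weight * outcome + (1 - outcome_weight) * effort
    return blended >= MEETS_EXPECTATIONS


if __name__ == "__main__":
    # The example above: high results, low values.
    print(salary_review_gate(6, 3))      # False: effort is below 4
    print(salary_review_weighted(6, 3))  # True: 0.75*6 + 0.25*3 = 5.25
```

The same person gets no salary review under the two-rating gate but clears the bar under a 75/25 blend. Neither rule is wrong; the point is to pick one deliberately and tell people which one you use.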

When you separate the measures, you make expectations transparent. And that means fewer surprises, more consistency and a fairer system for everyone. #nosurprises

Here’s the one lesson I’ve learned after designing countless performance reviews:

“People don’t hate performance reviews.

They hate inconsistent decisions that feel unfair.”

You can now ‘LISTEN’ to the newsletter. I’ve turned my newsletter into audio, voiced by AI podcasters. It’s in beta, so give it a listen and tell me what you think!

Scaling your start-up?

Let’s make sure your leadership, people and org are ready. Here are 3 ways I can help:

🔗 LinkedIn | 🌎 JooBee’s blog | 🤝 Work with me